Master Rate Limiting Strategies: Tools & Best Practices

- Gunashree RS

- Nov 16, 2024

- 4 min read

Rate Limiting Strategies for Developers

APIs are the backbone of modern applications, handling everything from user authentication to data fetching. As applications grow, managing API traffic becomes critical to ensure performance and reliability. This is where rate-limiting strategies come into play, safeguarding systems from overload while maintaining user satisfaction. In this article, we’ll explore the fundamentals of rate limiting, its importance for developers, and advanced strategies for implementation and testing, with tools like Devzery providing cutting-edge solutions.

Understanding Rate Limiting and Its Importance

Introduction: Why Rate Limiting is Essential for Developers

Rate limiting is the practice of controlling how many requests a client can send to an API within a given time frame. Without it, APIs are vulnerable to abuse, accidental overloads, or even Distributed Denial of Service (DDoS) attacks, which can degrade performance or cause outages.

For developers and QA teams, implementing effective rate limiting ensures that critical APIs remain reliable, especially under high traffic. Devzery, with its AI-powered testing and automation tools, empowers teams to build resilient APIs that thrive in dynamic environments.

What is Rate Limiting?

Rate limiting involves setting thresholds on API usage to maintain stability. For instance, an API might allow up to 1000 requests per minute per user, throttling additional requests until the time frame resets.

Key rate-limiting techniques include:

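Throttling: Slowing, queuing, or delaying requests as limits are approached, so the effective request rate stays within bounds.

Blocking: Rejecting requests outright once limits are exceeded, typically with an HTTP 429 Too Many Requests response.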
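The following minimal Python sketch shows the difference between the two. It assumes a hypothetical `Pacer` that admits at most one request every `interval` seconds. `handle_blocking` rejects an early request with a 429 status and a `Retry-After` hint. `handle_throttling` waits until the request is allowed. The clock and sleep functions can be swapped out only so that the behavior can be tested without real waiting.

```python
import math
import time


class Pacer:
    """Hypothetical helper: admits at most one request every `interval` seconds."""

    def __init__(self, interval: float, clock=time.monotonic):
        self.interval = interval
        self.clock = clock
        self.next_allowed = 0.0  # earliest time the next request may proceed

    def wait_time(self) -> float:
        """Seconds until the next request would be admitted (0 if it can go now)."""
        return max(0.0, self.next_allowed - self.clock())

    def admit(self) -> None:
        """Record an admitted request and push back the next allowed time."""
        self.next_allowed = max(self.clock(), self.next_allowed) + self.interval


def handle_blocking(pacer: Pacer):
    """Blocking: reject immediately and tell the client when to retry."""
    wait = pacer.wait_time()
    if wait > 0:
        return 429, {"Retry-After": str(math.ceil(wait))}
    pacer.admit()
    return 200, {}


def handle_throttling(pacer: Pacer, sleep=time.sleep):
    """Throttling: delay the request until it fits under the limit."""
    wait = pacer.wait_time()
    if wait > 0:
        sleep(wait)
    pacer.admit()
    return 200, {}
```

Blocking keeps server work minimal and pushes the retry decision to the client. Throttling serves every request but holds resources, such as a thread or connection, while it waits.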

Unlike general traffic management, rate limiting specifically focuses on controlling resource consumption at the API level. For enterprises leveraging microservices, this is especially critical, as every service depends on APIs to communicate.

Why Developers and QA Teams Need Rate Limiting Strategies

APIs are prone to overload during high traffic, impacting system performance and user experience. This is especially true for applications that rely on distributed systems, where multiple services interact dynamically.

Consequences of Poor Rate Limiting:

Slow response times.

Server crashes.

Loss of customer trust.

Testing rate limits effectively is vital. Manual testing can’t generate the volume and concurrency of real-world traffic, making tools like Devzery essential. By simulating high-concurrency scenarios, these tools identify bottlenecks early, helping developers tune rate limits.

Common Challenges in Implementing Rate Limiting

User Experience vs. Performance: Strict rate limits can frustrate users, while lenient ones can overload servers.

Testing Across Environments: Ensuring consistent behavior in staging and production environments is complex.

Scalability: Rate limits need to adapt as APIs grow and usage increases.

CI/CD Integration: Manual testing disrupts continuous delivery workflows.

Automated solutions like Devzery mitigate these challenges by integrating seamlessly into CI/CD pipelines, enabling rapid and reliable testing.

Effective Rate Limiting Strategies for Developers

Top Rate Limiting Strategies for APIs

Token Bucket Algorithm:

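Each client gets a bucket that holds up to a fixed number of tokens and refills at a steady rate. Each request consumes a token, and requests are rejected (or delayed) when the bucket is empty.

Suitable for bursty traffic: a full bucket absorbs short bursts up to its capacity, while the refill rate caps the long-run average.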
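Here is a minimal, single-process token bucket sketch in Python. It assumes each bucket lives in memory. A multi-instance deployment would typically keep bucket state in a shared store so that every API instance sees the same counts. `capacity` bounds the burst size and `rate` sets the refill speed in tokens per second. Both parameter names are illustrative.

```python
import time


class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; return whether the request may proceed."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

To enforce limits per user, keep a dictionary mapping each user ID to its own `TokenBucket`. For example, `capacity=5, rate=1.0` accepts a burst of five requests at once, then roughly one request per second afterwards.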

Fixed Window Rate Limiting:

Limits requests based on fixed intervals, such as 1000 requests per minute.

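Simple, but bursts can cluster at window boundaries. A client can use its full quota at the end of one window and again at the start of the next, sending up to twice the limit in a short span.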
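A fixed window counter can be sketched in Python by deriving a window index from the current time and resetting the count whenever the index changes. This minimal in-memory version keeps only the current window per key. The class name and parameters are hypothetical.

```python
import time


class FixedWindowLimiter:
    """Allow at most `limit` requests per key in each fixed window of `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.time):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.state = {}  # key -> (window index, requests counted in that window)

    def allow(self, key: str) -> bool:
        index = int(self.clock() // self.window)
        start, count = self.state.get(key, (index, 0))
        if start != index:
            # A new window has started: reset the counter.
            start, count = index, 0
        if count >= self.limit:
            return False
        self.state[key] = (start, count + 1)
        return True
```

This sketch also shows the boundary problem. With `limit=3` and `window=60`, three requests at second 59 and three more at second 60 are all accepted, because they fall into different windows.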

Sliding Window Algorithm:

Tracks requests over a rolling time frame. This avoids the boundary bursts of fixed windows and gives smoother traffic control.
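The article does not say which sliding-window variant it means. The Python sketch below uses a sliding window log, which stores each accepted request's timestamp and counts those from the last `window` seconds. It is exact, but it keeps one timestamp per accepted request. A sliding window counter, which weights the previous fixed window's count, is a common lower-memory approximation. Names here are hypothetical.

```python
import time
from collections import deque


class SlidingWindowLog:
    """Allow at most `limit` requests per key within any rolling `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.logs = {}  # key -> deque of accepted-request timestamps

    def allow(self, key: str) -> bool:
        now = self.clock()
        log = self.logs.setdefault(key, deque())
        # Drop timestamps that have fallen out of the rolling window.
        while log and log[0] <= now - self.window:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True
```

Consider the boundary scenario with `limit=3` and `window=60`. A fourth request at second 60 is rejected, because the three requests from second 59 are still inside the rolling window.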

Dynamic Rate Limiting:

Adjusts thresholds based on user roles or traffic patterns.

Example: Prioritizing premium users during peak demand.
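One simple way to express role- and demand-based limits is a lookup that the limiter consults on each request. The tier names and numbers below are hypothetical, as is the rule of halving non-premium limits during peak demand. They were chosen to mirror the premium-user example above.

```python
# Hypothetical per-role limits, in requests per minute.
BASE_LIMITS = {"free": 100, "standard": 1000, "premium": 5000}


def effective_limit(role: str, peak_demand: bool) -> int:
    """Return the requests-per-minute limit for a role under current demand."""
    # Unknown roles fall back to the most restrictive tier.
    base = BASE_LIMITS.get(role, BASE_LIMITS["free"])
    if peak_demand and role != "premium":
        # Under peak load, shed capacity from non-premium tiers first.
        return base // 2
    return base
```

The returned value would then serve as the limit for whichever algorithm you use, such as a fixed or sliding window.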

Devzery’s tools allow developers to test these strategies efficiently by simulating various traffic scenarios.

How to Test Rate Limiting to Prevent Overload

Effective rate limiting isn’t just about setting thresholds—it requires rigorous testing to ensure reliability.

Step-by-Step Testing Guide:

Define Traffic Thresholds: Establish acceptable request limits for your APIs.

Simulate High-Concurrency Requests: Use tools to mimic real-world traffic surges.

Monitor API Behavior: Analyze response times, error rates, and server load.

Iterate and Improve: Adjust thresholds and algorithms based on test results.
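The steps above can be sketched in Python with a thread pool that fires concurrent requests and tallies the status codes. `CountingLimiter` is a hypothetical in-process stand-in for the API under test. It uses a lock so that concurrent calls are counted correctly. Against a real service, `handler` would send an HTTP request and return the response status instead.

```python
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


class CountingLimiter:
    """Hypothetical stand-in for the API: accepts `limit` requests, then returns 429."""

    def __init__(self, limit: int):
        self.limit = limit
        self.count = 0
        self.lock = threading.Lock()

    def handle(self) -> int:
        with self.lock:  # without the lock, concurrent requests could over-admit
            if self.count >= self.limit:
                return 429
            self.count += 1
            return 200


def simulate_burst(handler, n_requests: int, concurrency: int) -> Counter:
    """Send `n_requests` calls through `concurrency` threads and count status codes."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        statuses = list(pool.map(lambda _: handler(), range(n_requests)))
    return Counter(statuses)
```

Suppose the threshold is 100 and the burst is 250 requests. A correct limiter returns exactly 100 successes and 150 rejections. Any other split points to a race condition or a misconfigured threshold.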

Devzery automates this process, saving time and ensuring consistent testing across development cycles.

Integrating Rate Limiting Strategies into CI/CD Workflows

In modern development, CI/CD pipelines automate the testing and deployment of APIs. Rate-limiting tests must be embedded within these pipelines to ensure continuous validation.

Best Practices:

Include rate-limiting simulations in pre-deployment stages.

Automate tests to run with every code change.

Monitor results and provide actionable feedback to developers.
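A rate-limit check that runs on every change can be an ordinary unit test. The sketch below uses Python's built-in `unittest`. `FakeApi` and the limit of 5 are hypothetical placeholders for your service and its configured threshold. In a pre-deployment stage you would point the same assertions at a staging endpoint. Saved as a `test_*.py` file, it runs under `python -m unittest discover`.

```python
import unittest

LIMIT = 5  # hypothetical per-client limit for the endpoint under test


class FakeApi:
    """Hypothetical in-process stand-in for the service; swap for a real HTTP client."""

    def __init__(self, limit: int = LIMIT):
        self.limit = limit
        self.used = {}  # client_id -> requests accepted so far

    def get(self, client_id: str):
        n = self.used.get(client_id, 0)
        if n >= self.limit:
            return 429, {"Retry-After": "60"}
        self.used[client_id] = n + 1
        return 200, {}


class RateLimitTest(unittest.TestCase):
    def setUp(self):
        self.api = FakeApi()

    def test_requests_within_limit_succeed(self):
        for _ in range(LIMIT):
            status, _ = self.api.get("client-a")
            self.assertEqual(status, 200)

    def test_excess_request_is_rejected_with_retry_after(self):
        for _ in range(LIMIT):
            self.api.get("client-a")
        status, headers = self.api.get("client-a")
        self.assertEqual(status, 429)
        self.assertIn("Retry-After", headers)

    def test_limits_are_isolated_per_client(self):
        for _ in range(LIMIT + 1):
            self.api.get("client-a")
        status, _ = self.api.get("client-b")
        self.assertEqual(status, 200)
```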

Devzery’s AI-powered tools integrate seamlessly into CI/CD workflows, providing real-time insights into API performance under different rate limits.

Modern Innovations in Rate-Limiting Strategies

Rate limiting has evolved to address the demands of cloud-native and AI-driven applications.

Dynamic Rate Limiting with AI: Adjust thresholds automatically based on traffic patterns and user behavior.

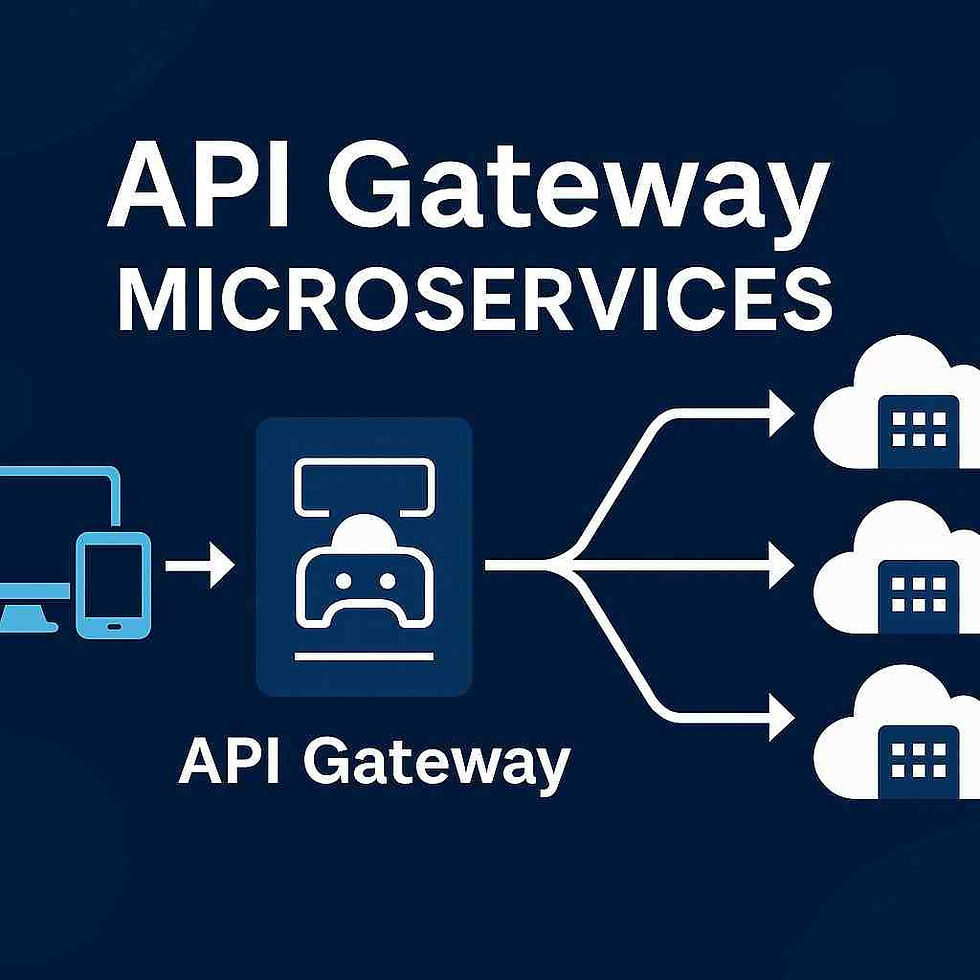

API Gateways: Tools like AWS API Gateway manage rate limits at scale, providing additional security.

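Cloud-Native Solutions: In Kubernetes, rate limiting is typically provided by ingress controllers and service meshes (for example, ingress-nginx or Istio with Envoy), which apply limits at the cluster edge or between services.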
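Automatic threshold adjustment does not have to involve a learned model. As a simpler illustration, the sketch below uses additive-increase/multiplicative-decrease (AIMD). This is a feedback rule borrowed from TCP congestion control, not an AI technique. It raises the limit slowly while the backend is healthy and cuts it sharply when latency or errors exceed their targets. The class name, the targets (200 ms p95 latency, 1% errors), and the step sizes are all hypothetical.

```python
class AdaptiveLimit:
    """Adjust a requests-per-second limit from backend health using AIMD."""

    def __init__(self, initial: int, minimum: int, maximum: int,
                 step: int = 10, backoff: float = 0.5,
                 latency_target_ms: float = 200.0, error_target: float = 0.01):
        self.limit = initial
        self.minimum = minimum
        self.maximum = maximum
        self.step = step
        self.backoff = backoff
        self.latency_target_ms = latency_target_ms
        self.error_target = error_target

    def update(self, p95_latency_ms: float, error_rate: float) -> int:
        """Feed one monitoring sample and return the new limit."""
        if p95_latency_ms > self.latency_target_ms or error_rate > self.error_target:
            # Unhealthy: multiplicative decrease, bounded below.
            self.limit = max(self.minimum, int(self.limit * self.backoff))
        else:
            # Healthy: additive increase, bounded above.
            self.limit = min(self.maximum, self.limit + self.step)
        return self.limit
```

To use it, call `update` once per monitoring interval with metrics from your observability stack. The result is the limit the rate limiter enforces for the next interval.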

Mid-to-large enterprises, especially in regions like the USA and India, benefit from these innovations. Devzery’s solutions enhance these strategies, providing developers with robust tools for testing and automation.

FAQs About Rate Limiting Strategies

1. What is the best rate-limiting algorithm for my use case?

The choice depends on your needs: the token bucket suits bursty traffic, while the sliding window offers smoother control.

2. How does rate limiting affect API scalability?

Rate limiting caps the load any single client can place on your backend, so performance stays stable as overall usage grows. Revisit the limits as your capacity and traffic patterns change.

3. Can rate limiting be tested in production environments?

Yes, but with caution. Keep production checks small and targeted. Run high-volume simulated traffic in staging, where it can’t affect real users.

Conclusion:

In today’s digital landscape, rate limiting is a cornerstone of API reliability and performance. From defining strategies to testing and deploying them, developers need modern tools to keep pace.

Devzery’s AI-powered solutions empower teams to implement and validate rate limits seamlessly, ensuring APIs remain robust under any conditions. Ready to optimize your APIs? Explore Devzery’s innovative tools today!

