Issues with AI: Exploring Challenges and Ethical Concerns

- Gunashree RS

- Nov 4, 2024

Introduction

Artificial Intelligence (AI) has revolutionized industries by transforming workflows, improving decision-making, and enhancing customer experiences. However, despite its impressive capabilities, AI brings a host of challenges that organizations must address to harness its potential responsibly. From ethical and legal concerns to technical issues like bias, transparency, and security, these obstacles are vital considerations in an AI-driven world. This guide provides a detailed look at the key issues with AI, explores their implications across sectors, and offers actionable insights for navigating the ethical and operational complexities surrounding AI.

Top Issues with AI and How They Impact Businesses

1. Ethical Issues in AI

One of the most pressing challenges with AI is ensuring that its use aligns with ethical standards. AI-driven decisions affect sensitive areas such as health, criminal justice, and finance, where fair and transparent practices are essential. Ethical concerns in AI include:

Privacy Violations: AI systems, particularly those in surveillance, pose significant privacy risks.

Bias and Discrimination: AI models may reinforce biases present in their training data, resulting in unfair treatment.

Social Impact: Ethical AI requires balancing technological progress with respect for human rights, particularly when AI is applied in critical sectors like criminal justice.

Addressing these concerns calls for careful attention to the social implications of AI and the establishment of frameworks that prioritize fairness and accountability.

2. Bias in AI

AI bias occurs when algorithms inherit and amplify biases found in their training data. For example, if an AI system is trained on data that reflects historical biases (e.g., in hiring or lending), it can inadvertently perpetuate these biases, leading to discriminatory outcomes. Bias in AI can impact:

Hiring and Recruitment: Biased algorithms may favor certain groups, reinforcing workplace inequalities.

Law Enforcement: AI-driven surveillance and predictive policing may unfairly target marginalized communities.

Financial Services: Algorithms used for loan approvals or credit scoring can exhibit racial or gender bias, impacting financial inclusivity.

Mitigating bias requires a proactive approach to data selection, preprocessing, and algorithm design. Organizations should invest in diverse data sources and continuous monitoring to minimize bias and promote fairness.
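As one possible form of continuous monitoring, the sketch below compares how often each group receives a positive decision. The function names, the toy data, and the choice of the disparate impact ratio as the metric are illustrative assumptions, not something the article prescribes.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest

# Hypothetical audit data: 1 = approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(rates, round(ratio, 3))
```

A ratio well below 1.0 suggests one group is selected far less often than another and that the model or its data deserves a closer look. The threshold that counts as acceptable is a policy decision and is not settled by this code.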

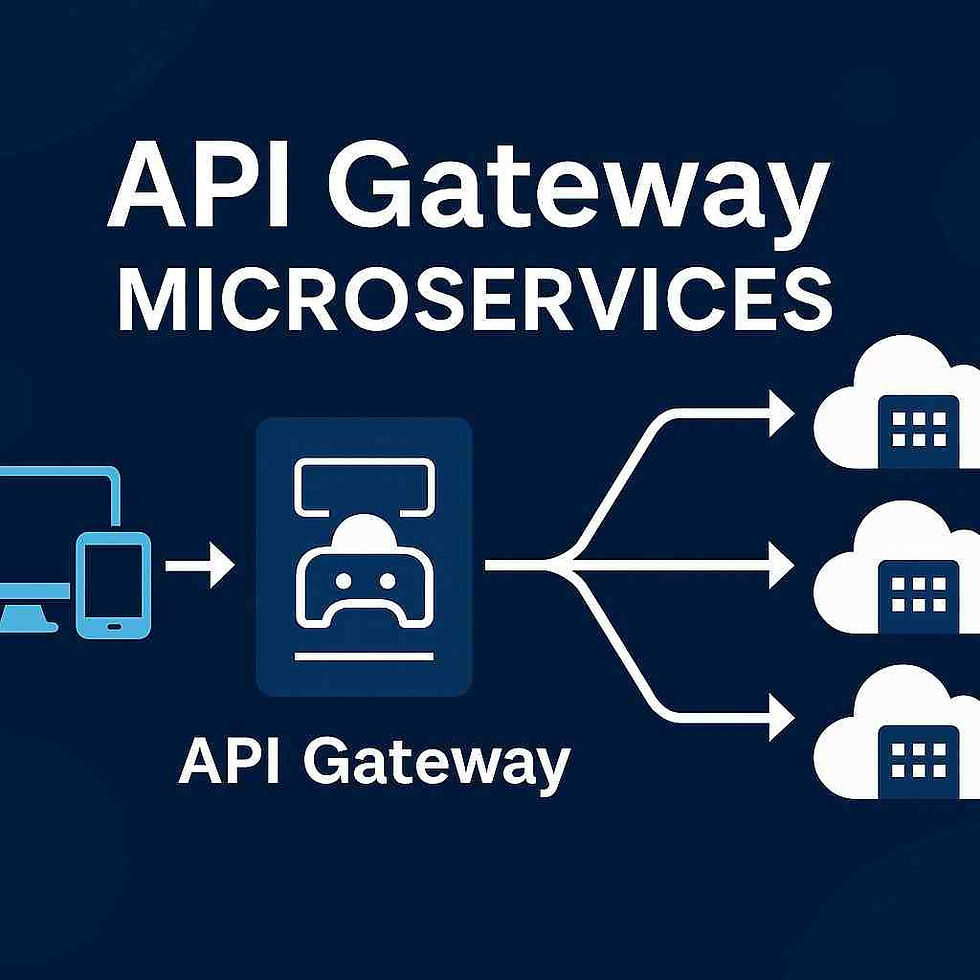

3. AI Integration Challenges

Integrating AI into existing systems is challenging, often requiring technical expertise and cross-departmental collaboration. Common issues with AI integration include:

Data Interoperability: Different systems may have unique data formats, making integration difficult.

Training and Upskilling: Employees must be trained to work alongside AI, which can require significant resources.

System Compatibility: Existing infrastructure may not be optimized for AI, resulting in compatibility issues.

A strategic integration plan with stakeholder involvement and gradual, iterative implementation can help organizations overcome these obstacles and ensure a smooth transition.

4. High Computing Power Requirements

AI systems, particularly those utilizing deep learning, require substantial computing power. Training sophisticated models demands high-performance hardware such as GPUs and TPUs, which can lead to increased costs and energy consumption. Challenges include:

Infrastructure Costs: Smaller companies may struggle to afford the necessary hardware.

Environmental Impact: High energy consumption raises concerns about sustainability.

Scalability: As AI models become more complex, computing requirements grow, making it difficult for companies to scale their AI initiatives.

Cloud computing and distributed computing can ease these challenges by offering scalable resources without large upfront hardware purchases. Quantum computing is sometimes mentioned as a longer-term option, but any benefit for AI development remains speculative.

5. Data Privacy and Security Concerns

AI relies on large datasets, which often include sensitive information. Ensuring data privacy and security is paramount, as any breach could result in significant financial and reputational damage. Key issues include:

Data Breaches: Unauthorized access to data stored or processed by AI systems can lead to identity theft or financial loss.

Data Misuse: Inadequate safeguards may lead to unethical or illegal uses of data.

Compliance with Regulations: Laws like GDPR require strict adherence to data protection standards, impacting how AI models manage personal data.

To address these concerns, organizations should employ encryption, anonymization, and privacy-preserving technologies such as differential privacy. These measures not only secure data but also build trust among users.
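To make differential privacy a little more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names, the sample data, and the epsilon value are assumptions chosen for illustration. A production system should rely on a vetted privacy library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical data: ages of five users; how many are 40 or older?
ages = [23, 45, 67, 34, 51]
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0)
print(noisy)  # varies per run; centered on the true count of 3
```

Smaller epsilon values add more noise and give stronger privacy at the cost of accuracy, so the value is a trade-off each organization has to choose for itself.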

6. Legal Challenges Surrounding AI

AI introduces complex legal questions, particularly regarding accountability and liability. Common legal issues in AI include:

Liability: Determining who is responsible when AI malfunctions or causes harm can be difficult.

Intellectual Property: Ownership of AI-generated content is a gray area, creating challenges for IP rights.

Compliance: Ensuring that AI applications comply with industry regulations is essential for avoiding legal repercussions.

Establishing clear legal frameworks and policies can help address these challenges, promoting innovation while safeguarding stakeholders’ rights. Collaboration between legal experts and technology developers is critical for creating fair and accountable AI practices.

7. Lack of AI Transparency

Transparency in AI is essential for building trust and ensuring accountability. Users and stakeholders need to understand how AI makes decisions, particularly in critical areas such as healthcare and finance. Transparency issues include:

Opaque Decision-Making: Complex models like neural networks often operate as "black boxes," making it difficult to trace how decisions are made.

Explainability Challenges: Limited transparency can lead to mistrust, especially when AI’s recommendations or actions affect people's lives.

Explainable AI (XAI) and clear documentation of model training, data sources, and performance metrics can enhance transparency, empowering stakeholders to make informed decisions based on AI outputs.
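One simple, model-agnostic explanation technique is permutation importance: shuffle one input feature at a time and measure how much accuracy drops. The sketch below is a toy illustration under stated assumptions. The `toy_model`, its features (income and age), and the data are hypothetical and stand in for a real model.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)

def permutation_importance(model, rows, labels, seed=0):
    """Return the accuracy drop observed when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by a shuffled value.
        shuffled = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Hypothetical model: approves when income (feature 0) exceeds 50; ignores age (feature 1).
def toy_model(row):
    return int(row[0] > 50)

incomes = range(10, 110, 10)
ages = [25, 60, 33, 47, 52, 29, 41, 38, 55, 30]
rows = list(zip(incomes, ages))
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Because the toy model never reads the age column, shuffling it cannot change any prediction, so its importance is zero. A feature the model depends on will usually show a positive drop.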

8. Limited Knowledge of AI Among Users

Misconceptions about AI’s capabilities and limitations can result in irresponsible use or unrealistic expectations. Limited knowledge about AI impacts:

Adoption: Misunderstandings can hinder adoption, as users may fear AI's impact on jobs or privacy.

Regulation: Policymakers with limited AI knowledge may struggle to create effective regulations.

Educational initiatives, accessible resources, and community engagement can bridge this knowledge gap, promoting informed use and regulation of AI.

9. Building Trust in AI

For AI adoption to be successful, users must trust that the technology is reliable, ethical, and beneficial. Trust issues in AI stem from concerns over transparency, accountability, and consistency. Building trust involves:

Transparency: Clearly communicating how AI works and disclosing data sources.

Reliability: Ensuring AI outputs are consistent and dependable.

Accountability: Taking responsibility for AI’s actions and correcting errors promptly.

By fostering transparency, ethical practices, and reliability, organizations can build trust in AI systems and encourage their widespread adoption.

10. Lack of AI Explainability

AI explainability refers to the ability to understand and trace how an AI system reaches its conclusions. Without explainability, users may distrust AI, especially in sectors where errors have significant consequences, like healthcare. Explainability challenges include:

Complexity: Many AI models are inherently complex, making them difficult to interpret.

User Distrust: Lack of clarity can lead users to question the AI’s reliability and fairness.

Implementing explainable models, especially in critical fields, enhances transparency and builds user confidence, contributing to a more trustworthy AI ecosystem.

11. Discrimination in AI

Discrimination arises when AI systems produce biased outcomes against certain groups due to historical biases in the data. Discriminatory outcomes can occur in:

Hiring Processes: Algorithms may favor certain demographics based on historical hiring data.

Loan Approvals: Biases in lending data can result in discriminatory practices against minority groups.

To reduce discrimination, organizations can apply fairness-aware machine learning techniques, promoting equitable treatment for all individuals.
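One example of a fairness-aware technique is reweighing, a preprocessing step that assigns each training example a weight so that group membership and outcome look statistically independent in the weighted data. The article does not name a specific method, so the sketch below is only one option. The function name and toy data are illustrative assumptions.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical historical data: group "a" was approved (1) more often than group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)

def weighted_positive_rate(group):
    """Weighted share of positive labels within one group."""
    pairs = [(w, y) for w, g, y in zip(weights, groups, labels) if g == group]
    return sum(w for w, y in pairs if y == 1) / sum(w for w, _ in pairs)

print(weighted_positive_rate("a"), weighted_positive_rate("b"))
```

The weights can then be passed to any training routine that accepts per-example weights. Under-represented combinations, such as approvals in group "b", receive weights above 1, and over-represented ones receive weights below 1.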

12. High Expectations

The capabilities of AI are often overstated, leading to inflated expectations and subsequent disappointment. Unrealistic expectations about AI's potential can hinder its adoption. Challenges include:

Misinterpretations: Users may expect AI to solve complex problems instantly.

Investment Risk: Overhyped expectations may result in financial losses if AI does not deliver anticipated outcomes.

Educating stakeholders about AI’s realistic capabilities and limitations can help temper expectations and promote effective AI deployment.

13. Effective Implementation Strategies for AI

Successful AI implementation requires a well-defined strategy, covering aspects like data quality, model selection, and integration into existing workflows. Effective strategies involve:

Goal Alignment: Ensuring AI solutions align with business objectives.

Collaboration: Engaging both AI specialists and domain experts to create tailored solutions.

Pilot Programs: Testing AI initiatives in smaller-scale environments before full deployment.

Structured implementation strategies reduce risk and improve the likelihood of achieving desired outcomes.

14. Data Confidentiality

Data confidentiality involves protecting sensitive information from unauthorized access, a critical issue as AI systems handle more personal data. Challenges in maintaining data confidentiality include:

Data Breaches: Potential exposure of sensitive information can result in regulatory penalties and loss of trust.

Regulatory Compliance: Laws like GDPR impose strict requirements on data protection.

Strict encryption and access controls, along with adherence to data privacy laws, are essential for safeguarding data and building trust with users.

15. AI Software Malfunctions

Software malfunctions in AI systems can have serious consequences, from faulty recommendations to financial or reputational damage. Addressing malfunctions involves:

Robust Testing: Thorough testing at every stage of the software lifecycle is essential for minimizing errors.

Error Handling: Having contingency plans in place helps contain malfunctions and keeps disruption to a minimum.

A culture of transparency and accountability can help detect and resolve software malfunctions efficiently, improving AI reliability.
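A contingency plan can be as simple as a wrapper that falls back to a safe default whenever the model fails or returns an implausible value. The sketch below assumes a hypothetical scoring model whose valid outputs lie between 0 and 1. All names in it are illustrative.

```python
def predict_with_fallback(model, features, fallback, is_valid):
    """Return (prediction, source), using `fallback` if the model errors or misbehaves."""
    try:
        prediction = model(features)
    except Exception:
        # The model crashed; use the safe default and record why.
        return fallback, "fallback:error"
    if not is_valid(prediction):
        # The model ran but produced an out-of-range value.
        return fallback, "fallback:invalid"
    return prediction, "model"

# Hypothetical models used to exercise each path.
def healthy_model(features):
    return 0.8

def broken_model(features):
    raise RuntimeError("model unavailable")

def wild_model(features):
    return 42.0

def in_unit_range(score):
    return 0.0 <= score <= 1.0

print(predict_with_fallback(healthy_model, {}, 0.5, in_unit_range))
print(predict_with_fallback(broken_model, {}, 0.5, in_unit_range))
print(predict_with_fallback(wild_model, {}, 0.5, in_unit_range))
```

Returning the source alongside the prediction makes it possible to log how often the fallback is used, which supports the detection and accountability described above.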

Overcoming Challenges in AI

Addressing AI’s challenges requires a multifaceted approach:

Establish Ethical Standards: Develop guidelines that govern AI development and deployment.

Reduce Bias: Use diverse data sources, conduct regular audits, and apply fairness-aware algorithms.

Enhance Transparency: Utilize explainable AI to make decision-making processes more transparent.

Engage Legal Experts: Collaborate with legal professionals to stay compliant with evolving AI regulations.

Set Realistic Expectations: Communicate AI’s limitations to prevent unrealistic goals.

Implement Data Protection Measures: Ensure encryption, access control, and regulatory compliance.

Prioritize Testing and Quality Assurance: Conduct regular testing and maintain contingency plans for software malfunctions.

Conclusion

AI presents an array of exciting possibilities, but its integration and usage also bring unique challenges that must be managed with diligence and foresight. From ethical considerations and biases to issues of transparency and technical limitations, the path to responsible AI involves addressing these challenges through strategic planning, collaboration, and open communication. As AI continues to evolve, developing a thoughtful approach to its deployment will help organizations leverage AI’s potential while minimizing risks and building public trust.

Key Takeaways

AI poses ethical, technical, and operational challenges that require careful attention.

Bias, transparency, and data privacy are critical concerns in responsible AI use.

Effective implementation strategies and regular testing are essential for reliable AI.

Educating users and setting realistic expectations can reduce AI-related risks.

Collaborating with legal and technical experts can help navigate regulatory requirements.

FAQs

1. How does AI impact job markets?

AI can automate routine tasks, displacing some jobs while creating new opportunities in others.

2. What are the main ethical issues in AI?

Key ethical issues include privacy violations, bias, and the social impact of AI on human rights.

3. How can AI bias be mitigated?

Mitigating AI bias requires diverse data sources, fairness-aware algorithms, and ongoing audits.

4. What is explainable AI?

Explainable AI provides insights into how AI models reach decisions, making complex systems more transparent.

5. What are the legal implications of AI use?

Legal issues involve liability, intellectual property, and regulatory compliance.

6. How does AI integration affect businesses?

AI integration challenges include data compatibility, upskilling needs, and fitting AI into existing workflows and infrastructure.
