Snowflake Testing: Best Practices for Data Quality & Validation 2025

- Gunashree RS

- Jul 14, 2025

What is Snowflake Testing?

Q: What exactly is Snowflake testing?

Snowflake testing is the practice of checking data inside a Snowflake data warehouse for integrity, accuracy, reliability, and consistency before it feeds BI tools and other downstream consumers. A systematic approach helps the warehouse deliver accurate insights and reduces the risk of costly business decision errors.

Q: Why is Snowflake testing crucial for modern data teams?

Data quality issues can have severe consequences. According to industry research, poor data quality costs organizations an average of $12.9 million annually. Inconsistent data can generate false reports and negatively affect business decisions. Testing helps identify and resolve these issues before they impact downstream systems.

Q: What are the key components of Snowflake testing?

Snowflake testing encompasses several critical areas:

Data Integrity Testing - Verifying data accuracy and completeness

Schema Validation - Ensuring data structure consistency

Performance Testing - Validating query execution times

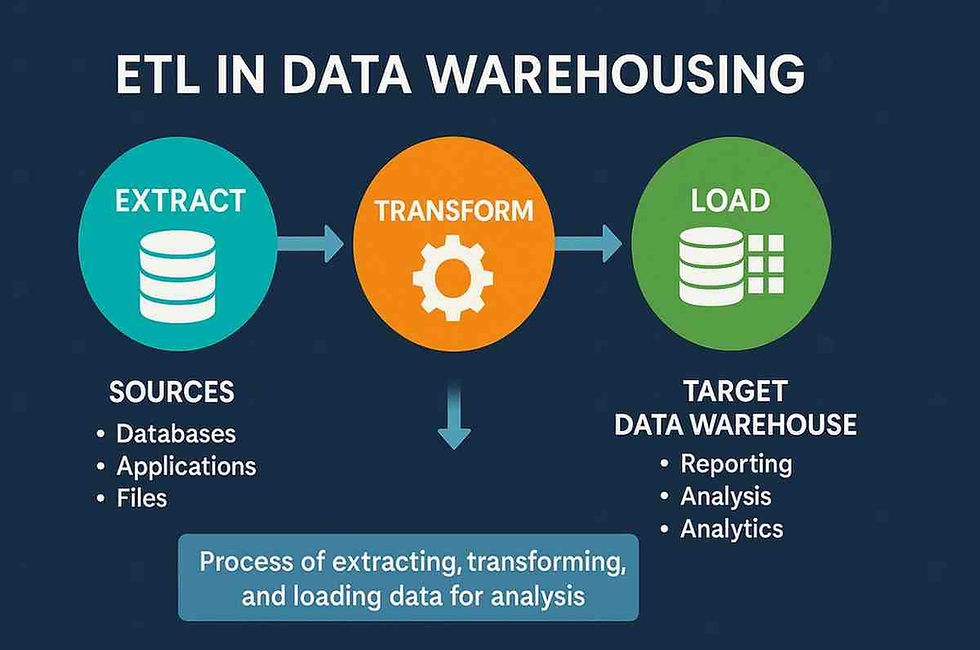

ETL Testing - Validating data transformations

Security Testing - Ensuring data protection protocols

Data Quality Monitoring in Snowflake

Q: How does Snowflake's native data quality monitoring work?

Snowflake's data quality monitoring uses data metric functions (DMFs) to measure quality metrics such as completeness, accuracy, uniqueness, and validity. This Enterprise Edition feature runs the DMFs you attach to a table or view on a schedule you set, or when the table's data changes, so data health is tracked automatically rather than through ad hoc queries.

Q: What are Data Metric Functions (DMFs) and how do they work?

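A DMF is a function that takes one or more columns of a table or view and returns a single measurement, such as a count of NULL values. You can use Snowflake's provided system DMFs for common metrics such as row count, duplicates, and freshness. Alternatively, you can create your own custom DMFs tailored to your specific business requirements.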
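As a rough illustration of how system DMFs are attached to a table, the sketch below only builds the SQL text and never connects to Snowflake. The table name, column names, and the helper `dmf_statements` are hypothetical, and the statement shapes reflect our reading of the Snowflake documentation, so verify them against the current docs before running anything.

```python
# Sketch only: renders SQL strings, it does not execute them.
# A small allow-list of system DMFs from the SNOWFLAKE.CORE schema.
SYSTEM_DMFS = {"NULL_COUNT", "DUPLICATE_COUNT", "FRESHNESS"}


def dmf_statements(table: str, schedule: str, checks: list[tuple[str, str]]) -> list[str]:
    """Return ALTER TABLE statements that set a schedule and attach DMFs.

    `checks` is a list of (dmf_name, column) pairs. Names are validated
    against SYSTEM_DMFS so a typo fails here rather than in the warehouse.
    """
    statements = [f"ALTER TABLE {table} SET DATA_METRIC_SCHEDULE = '{schedule}';"]
    for dmf, column in checks:
        if dmf not in SYSTEM_DMFS:
            raise ValueError(f"unknown system DMF: {dmf}")
        statements.append(
            f"ALTER TABLE {table} ADD DATA METRIC FUNCTION SNOWFLAKE.CORE.{dmf} ON ({column});"
        )
    return statements


# Hypothetical table and columns, for illustration only.
stmts = dmf_statements(
    "sales.public.orders",
    "60 MINUTE",
    [("NULL_COUNT", "customer_id"), ("DUPLICATE_COUNT", "order_id")],
)
```

Generating the statements from a reviewed list of checks keeps the monitoring setup in version control alongside the rest of the test configuration.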

Q: What metrics should you monitor for data quality?

According to recent Snowflake documentation, key metrics include:

Completeness: Percentage of non-null values in critical columns

Accuracy: Data conformity to business rules and expected formats

Uniqueness: Duplicate record identification and management

Validity: Data format and range validation

Freshness: Data recency and update frequency tracking
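To make these metrics concrete, here is a minimal Python sketch that computes four of them over rows already fetched from a table. The row layout, column names, and email pattern are assumptions for illustration; in practice the same logic would usually run as SQL or as a DMF inside Snowflake.

```python
import re
from datetime import datetime


def completeness(rows, column):
    """Share of rows whose value in `column` is not None."""
    if not rows:
        return 0.0
    return sum(r[column] is not None for r in rows) / len(rows)


def duplicate_count(rows, column):
    """Number of non-null values that repeat an earlier value."""
    values = [r[column] for r in rows if r[column] is not None]
    return len(values) - len(set(values))


def validity(rows, column, pattern):
    """Share of non-null values that fully match the regex `pattern`."""
    values = [r[column] for r in rows if r[column] is not None]
    if not values:
        return 1.0  # nothing to validate, treated as vacuously valid
    return sum(bool(re.fullmatch(pattern, v)) for v in values) / len(values)


def freshness_hours(rows, column, now):
    """Hours elapsed between the newest timestamp in `column` and `now`."""
    newest = max(r[column] for r in rows)
    return (now - newest).total_seconds() / 3600


# Hypothetical sample rows.
rows = [
    {"order_id": 1, "email": "a@example.com", "loaded_at": datetime(2025, 7, 14, 8, 0)},
    {"order_id": 2, "email": None, "loaded_at": datetime(2025, 7, 14, 9, 0)},
    {"order_id": 2, "email": "not-an-email", "loaded_at": datetime(2025, 7, 14, 10, 0)},
    {"order_id": 3, "email": "b@example.com", "loaded_at": datetime(2025, 7, 14, 10, 30)},
]
EMAIL = r"[^@\s]+@[^@\s]+\.[^@\s]+"

print(completeness(rows, "email"))        # 0.75
print(duplicate_count(rows, "order_id"))  # 1
print(freshness_hours(rows, "loaded_at", datetime(2025, 7, 14, 12, 0)))  # 1.5
```

Accuracy is left out because it depends on business rules that cannot be expressed generically.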

Testing Strategies for Snowflake Data Warehouses

Q: What are the most effective testing strategies for Snowflake?

Simple data testing methods, such as schema or custom tests, allow you to validate your data hypotheses and ensure your code is operating correctly. Modern testing approaches include:

1. Schema Testing

Column existence validation

Data type verification

Constraint checking

Referential integrity testing

2. Custom Business Logic Testing

Revenue calculation validation

Customer segmentation accuracy

KPI consistency checks

Cross-table relationship verification

3. Performance Testing

Query execution time monitoring

Warehouse scaling validation

Concurrent user load testing

Resource utilization analysis
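The schema testing items above can be sketched as a comparison between an expected column map and the one actually found. The sketch assumes the actual columns were already read, for example from INFORMATION_SCHEMA.COLUMNS, into a plain dictionary; the function name and sample columns are hypothetical.

```python
def schema_diff(expected: dict[str, str], actual: dict[str, str]) -> dict[str, list]:
    """Compare {column_name: data_type} maps and report the differences."""
    shared = set(expected) & set(actual)
    return {
        # Columns the tests rely on that the table no longer has.
        "missing": sorted(set(expected) - set(actual)),
        # Columns that appeared without the expectation being updated.
        "unexpected": sorted(set(actual) - set(expected)),
        # (column, expected_type, actual_type) for shared columns that differ.
        "type_mismatches": sorted(
            (c, expected[c], actual[c]) for c in shared if expected[c] != actual[c]
        ),
    }


# Hypothetical expectation and observed schema.
expected = {"ORDER_ID": "NUMBER", "AMOUNT": "NUMBER", "CREATED_AT": "TIMESTAMP_NTZ"}
actual = {"ORDER_ID": "NUMBER", "AMOUNT": "TEXT", "LEGACY_FLAG": "BOOLEAN"}

diff = schema_diff(expected, actual)
print(diff)
```

An empty result in all three lists means the table matches the expectation; anything else can fail the test run.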

Q: Which tools are recommended for Snowflake testing?

You can turn to applications like dbt and Great Expectations to test data in Snowflake. Popular testing frameworks include:

dbt (Data Build Tool): Provides built-in testing capabilities with custom test creation

Great Expectations: Offers comprehensive data validation and profiling

Monte Carlo: Delivers data observability and anomaly detection

Datafold: Provides data diff and validation capabilities

Data Validation Methods and Best Practices

Q: What are the core data validation methods for Snowflake?

Effective data validation requires a multi-layered approach:

1. Source Data Validation

File format verification

Schema compliance checking

Data completeness assessment

Duplicate detection

2. Transformation Validation

ETL logic verification

Data mapping accuracy

Aggregation correctness

Business rule implementation

3. Output Validation

Report accuracy verification

Dashboard consistency checks

API response validation

Export file integrity
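One way to check aggregation correctness is to recompute totals from the source rows and reconcile them against what the transformation wrote. The following is a minimal sketch under that assumption; the column names, the tolerance, and the helper names are illustrative and not part of any particular tool.

```python
from collections import defaultdict
from decimal import Decimal


def aggregate_by(rows, key, value):
    """Sum `value` per `key`, using Decimal to avoid float rounding drift."""
    totals = defaultdict(Decimal)
    for r in rows:
        totals[r[key]] += Decimal(str(r[value]))
    return dict(totals)


def reconcile(source_rows, target_totals, key, value, tolerance=Decimal("0.01")):
    """Return (key, expected, actual) for every group that does not match."""
    expected = aggregate_by(source_rows, key, value)
    problems = []
    for k in sorted(set(expected) | set(target_totals)):
        exp, got = expected.get(k), target_totals.get(k)
        if exp is None or got is None or abs(exp - Decimal(str(got))) > tolerance:
            problems.append((k, exp, got))
    return problems


# Hypothetical source rows and the totals a transformation produced.
source = [
    {"region": "EU", "amount": "10.50"},
    {"region": "EU", "amount": "4.50"},
    {"region": "US", "amount": "7.00"},
]
target = {"EU": Decimal("15.00"), "US": Decimal("8.00")}

print(reconcile(source, target, "region", "amount"))
```

Here only the US group is reported, because its stored total differs from the recomputed one by more than the tolerance.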

Q: How do you implement automated testing in Snowflake?

Automation is crucial for maintaining data quality at scale. Best practices include:

Continuous Integration/Continuous Deployment (CI/CD): Integrate testing into deployment pipelines

Scheduled Testing: Run data quality checks at regular intervals

Alert Systems: Configure notifications for test failures

Version Control: Track testing configurations and results
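For CI/CD integration, the essential piece is a runner that executes every check, records failures without stopping at the first one, and returns a non-zero exit code so the pipeline fails. The sketch below shows that shape with placeholder checks; in a real pipeline each callable would query Snowflake, and the names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def run_checks(checks: dict[str, Callable[[], bool]]) -> list[CheckResult]:
    """Run every check; an exception counts as a failure, not a crash."""
    results = []
    for name, check in checks.items():
        try:
            passed = bool(check())
            detail = "" if passed else "check returned False"
        except Exception as exc:
            passed, detail = False, f"{type(exc).__name__}: {exc}"
        results.append(CheckResult(name, passed, detail))
    return results


def exit_code(results: list[CheckResult]) -> int:
    """0 when all checks pass, 1 otherwise, suitable for sys.exit in CI."""
    return 0 if all(r.passed for r in results) else 1


# Placeholder checks standing in for real warehouse queries.
checks = {
    "row_count_positive": lambda: 120 > 0,
    "no_null_ids": lambda: 3 == 0,
    "broken_check": lambda: 1 / 0,
}
results = run_checks(checks)
for r in results:
    print(r.name, "PASS" if r.passed else f"FAIL ({r.detail})")
```

The same runner can be invoked by a scheduler for regular intervals, and the failed results passed to whatever alerting channel the team uses.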

Performance Testing for Snowflake Environments

Q: Why is performance testing essential for Snowflake?

Performance testing ensures your data warehouse can handle expected workloads efficiently. Key considerations include:

Warehouse Sizing

X-Small: 1 credit per hour

Small: 2 credits per hour

Medium: 4 credits per hour

Large: 8 credits per hour

X-Large and beyond: Each step up doubles the compute resources and the credits consumed per hour

Query Optimization

Clustering key implementation

Micro-partition pruning verification

Join optimization validation

Caching effectiveness measurement

Q: What performance metrics should you track?

Critical performance indicators include:

Query Execution Time: Average and peak response times

Warehouse Utilization: Warehouse load, query queuing, and spilling to local or remote storage

Concurrency Performance: Multi-user query execution efficiency

Data Loading Speed: Ingestion rate and throughput metrics
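As a sketch of how query execution times might be summarized and compared against a baseline, the code below computes average, 95th percentile, and maximum durations, then flags a regression when the p95 grows beyond a threshold. The durations are made-up numbers and the 20% threshold is an arbitrary assumption; real values would come from query history.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(durations_ms):
    """Average, p95, and max of a list of query durations in milliseconds."""
    return {
        "avg": statistics.mean(durations_ms),
        "p95": percentile(durations_ms, 95),
        "max": max(durations_ms),
    }


def regressed(baseline, current, threshold=1.2):
    """True when the current p95 exceeds the baseline p95 by the threshold factor."""
    return current["p95"] > baseline["p95"] * threshold


# Made-up durations for the same query before and after a change.
baseline = summarize([100, 110, 120, 130, 140, 150, 160, 170, 180, 190])
current = summarize([100, 115, 125, 135, 150, 160, 175, 190, 240, 260])

print(baseline["avg"], baseline["p95"])  # 145 190
print(regressed(baseline, current))      # True
```

Comparing percentiles rather than averages makes the check less sensitive to a single fast or slow run.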

Security Testing in Snowflake

Q: How do you ensure data security in Snowflake testing?

Security testing validates access controls and data protection measures:

1. Authentication Testing

Single Sign-On (SSO) validation

Multi-factor authentication verification

User credential management

Session timeout testing

2. Authorization Testing

Role-based access control (RBAC) verification

Data masking policy validation

Column-level security testing

Row-level security implementation

3. Encryption Testing

Data at rest encryption verification

Data in transit protection validation

Key management testing

Secure data sharing validation
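RBAC verification often reduces to comparing the grants a role should have with the grants it actually has. The sketch below assumes both sets have already been collected, for instance from the output of SHOW GRANTS, as (role, privilege, object) tuples; the roles and objects shown are hypothetical.

```python
Grant = tuple[str, str, str]  # (role, privilege, object)


def grant_violations(expected: set[Grant], actual: set[Grant]) -> dict[str, list[Grant]]:
    """Report grants that are missing and grants that should not exist."""
    return {
        "missing": sorted(expected - actual),  # access the role needs but lacks
        "excess": sorted(actual - expected),   # access beyond the approved policy
    }


# Hypothetical policy and observed state.
expected = {
    ("ANALYST", "SELECT", "SALES.PUBLIC.ORDERS"),
    ("ANALYST", "USAGE", "SALES.PUBLIC"),
}
actual = {
    ("ANALYST", "SELECT", "SALES.PUBLIC.ORDERS"),
    ("ANALYST", "SELECT", "HR.PUBLIC.SALARIES"),
}

violations = grant_violations(expected, actual)
print(violations)
```

Excess grants are usually the more urgent finding, since they indicate access that was never approved.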

Common Challenges and Solutions

Q: What are the most common Snowflake testing challenges?

Based on industry experience, frequent challenges include:

1. Data Volume Handling

Challenge: Testing large datasets efficiently

Solution: Implement sampling strategies and parallel processing

2. Schema Evolution

Challenge: Managing changing data structures

Solution: Automated schema validation and version control

3. Performance Regression

Challenge: Detecting query performance degradation

Solution: Baseline performance tracking and automated alerts

4. Data Freshness

Challenge: Ensuring timely data updates

Solution: Implement data freshness monitoring and SLA tracking
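For the sampling strategy mentioned under data volume handling, one option is deterministic hash-based sampling, so that the same keys are selected on every run and results stay comparable. This is a minimal sketch of that idea, not a Snowflake feature; the helper name and the choice of SHA-256 are assumptions.

```python
import hashlib


def in_sample(key, percent: int) -> bool:
    """Deterministically decide whether `key` falls in a `percent` sample.

    The key is hashed and mapped to a bucket from 0 to 99; keys in buckets
    below `percent` are selected, so the same key always gets the same answer.
    """
    digest = hashlib.sha256(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < percent


# Select roughly 10% of hypothetical order ids for expensive checks.
order_ids = range(10_000)
sampled = [oid for oid in order_ids if in_sample(oid, 10)]
print(len(sampled))
```

Because selection depends only on the key, a row that failed yesterday is re-tested today, which plain random sampling does not guarantee.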

Advanced Testing Techniques

Q: What are advanced testing techniques for Snowflake?

Modern data teams employ sophisticated testing approaches:

1. Data Profiling

Statistical analysis of data distributions

Pattern recognition and anomaly detection

Data quality scoring and trending

Automated data documentation

2. Synthetic Data Testing

Generated test data for development environments

Privacy-compliant testing scenarios

Edge case simulation

Scalability testing with realistic data volumes

3. Continuous Data Quality Monitoring

Real-time data quality dashboards

Automated alert systems

Data lineage tracking

Impact analysis for data changes
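Synthetic data testing can be sketched with a seeded generator, so the same test data is produced on every run, plus a fixed set of edge cases appended to it. The order layout, value ranges, and edge cases below are assumptions chosen for illustration.

```python
import random
from datetime import date, timedelta

# Hand-picked rows that random generation is unlikely to produce.
EDGE_CASES = [
    {"order_id": 0, "amount": 0.0, "customer": "", "order_date": date(2024, 2, 29)},
    {"order_id": -1, "amount": -50.0, "customer": None, "order_date": date(1970, 1, 1)},
]


def synthetic_orders(n: int, seed: int = 0) -> list[dict]:
    """Generate `n` reproducible fake orders followed by the edge cases."""
    rng = random.Random(seed)  # local generator, leaves global random state alone
    start = date(2025, 1, 1)
    rows = [
        {
            "order_id": i,
            "amount": round(rng.uniform(1, 500), 2),
            "customer": f"customer_{rng.randint(1, 50)}",
            "order_date": start + timedelta(days=rng.randint(0, 180)),
        }
        for i in range(1, n + 1)
    ]
    return rows + [dict(case) for case in EDGE_CASES]


orders = synthetic_orders(10, seed=7)
print(len(orders))  # 12
```

Since no real customer data is involved, rows generated this way can be loaded into development environments without masking.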

Frequently Asked Questions

Q: How often should I run Snowflake data quality tests?

The frequency depends on your data ingestion patterns and business requirements. For real-time data, implement continuous monitoring. For batch processing, daily or hourly testing is typically sufficient. Critical business data should be tested more frequently than reference data.

Q: What's the difference between data validation and data testing in Snowflake?

Data validation focuses on verifying data correctness against predefined rules, while data testing encompasses broader quality assurance, including performance, security, and functionality testing. Validation is a subset of comprehensive testing strategies.

Q: Can I test Snowflake data without affecting production performance?

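Yes. Because each virtual warehouse is an independent compute resource, tests that run on a dedicated warehouse do not compete with production queries for compute. Use separate warehouses for testing, set up resource monitors to cap the credits that test workloads can consume, and schedule heavy tests during off-peak hours when they must share a warehouse with production.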

Q: How do I handle test data privacy and compliance?

Implement data masking and anonymization techniques for sensitive data. Use Snowflake's built-in column-level security features, such as dynamic data masking and external tokenization. Ensure test environments comply with regulations like GDPR and CCPA.

Q: What are the best practices for organizing test cases in Snowflake?

Organize tests by data domain, criticality level, and testing type. Use version control for test configurations, maintain test documentation, and implement automated test execution pipelines. Group related tests for efficient execution.

Q: How do I measure the effectiveness of my Snowflake testing strategy?

Track metrics such as data quality scores, test coverage percentages, defect detection rates, and resolution times. Monitor business impact metrics like report accuracy and decision-making confidence to assess overall effectiveness.

Conclusion

Snowflake testing is essential for maintaining data quality and ensuring reliable business intelligence. By implementing comprehensive testing strategies that cover data validation, performance monitoring, and security verification, organizations can maximize the value of their cloud data warehouse investment.

The combination of Snowflake's native testing capabilities and third-party tools provides a robust framework for maintaining data quality at scale. Regular testing, automated monitoring, and proactive issue resolution create a foundation for data-driven decision making.

Success in Snowflake testing requires a balanced approach that addresses technical requirements while supporting business objectives. Organizations that invest in proper testing frameworks can expect improved data confidence, fewer errors, and better operational efficiency.

Key Takeaways

• Implement Multi-layered Testing: Combine schema validation, data quality checks, performance testing, and security verification for comprehensive coverage

• Leverage Native Snowflake Features: Utilize Data Metric Functions (DMFs) and built-in monitoring capabilities for efficient quality management

• Automate Testing Processes: Integrate testing into CI/CD pipelines and implement scheduled monitoring for consistent data quality

• Focus on Business-Critical Data: Prioritize testing efforts on data that directly impacts business decisions and revenue generation

• Use Appropriate Testing Tools: Select tools like dbt, Great Expectations, or Monte Carlo based on your specific testing requirements and technical environment

• Monitor Performance Continuously: Track query execution times, warehouse utilization, and scaling performance to ensure optimal system operation

• Maintain Security Standards: Implement comprehensive security testing, including authentication, authorization, and encryption validation

• Document Testing Procedures: Create clear testing documentation and maintain version control for reproducible and scalable testing processes

• Track Testing Effectiveness: Measure success through data quality scores, defect detection rates, and business impact metrics

• Plan for Data Evolution: Design flexible testing frameworks that adapt to changing data schemas and business requirements
