Guide to Delivery Pipeline in DevOps - Best Practices & Implementation

- Gunashree RS

- Jun 10, 2025

In today's fast-paced digital landscape, organizations are constantly seeking ways to deliver software faster, more reliably, and with higher quality. The delivery pipeline in DevOps addresses exactly this need, and it has changed how teams build, test, and deploy applications. Whether you're a seasoned DevOps engineer or just starting your journey, understanding delivery pipelines is crucial for success in modern software development.

What Is a Delivery Pipeline in DevOps?

A delivery pipeline in DevOps represents an automated sequence of processes that takes code from development through production deployment. Think of it as a well-orchestrated assembly line where each stage adds value and ensures quality before the software reaches end users.

A continuous delivery pipeline lets organizations release customer value whenever it is needed and improve the delivery process incrementally, which is why it has become an essential component of modern DevOps practices.

This automated workflow typically includes several key stages:

Source code management and version control

Automated building and compilation

Comprehensive testing at multiple levels

Security scanning and compliance checks

Deployment to various environments

Monitoring and feedback loops

Core Components of an Effective Delivery Pipeline

Continuous Integration (CI)

Continuous Integration forms the foundation of your delivery pipeline. It automatically triggers when developers commit code changes, ensuring that new code integrates smoothly with the existing codebase. This process includes:

Automated builds that compile source code into executable artifacts

Unit testing to verify that individual components function correctly

Code quality analysis using tools like SonarQube or Code Climate

Dependency scanning for security vulnerabilities
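
Real dependency scanning relies on a dedicated scanner and a maintained vulnerability database. The sketch below only illustrates the idea: it parses pinned `name==version` requirement lines and flags any that appear in a small advisory table. The `ADVISORIES` data and package names are hypothetical placeholders, not real advisories.

```python
from dataclasses import dataclass

# Hypothetical advisory data: package name -> known-vulnerable versions.
# A real scanner would query a vulnerability database instead.
ADVISORIES: dict[str, set[str]] = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherpkg": {"2.3.0"},
}


@dataclass(frozen=True)
class Finding:
    package: str
    version: str


def parse_pinned(requirements: str) -> dict[str, str]:
    """Parse 'name==version' lines, skipping blanks, comments and unpinned lines."""
    pinned: dict[str, str] = {}
    for raw in requirements.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or "==" not in line:
            continue  # this sketch only checks exact pins
        name, version = line.split("==", 1)
        pinned[name.strip().lower()] = version.strip()
    return pinned


def scan(requirements: str) -> list[Finding]:
    """Return a finding for every pinned dependency listed in ADVISORIES."""
    return [
        Finding(name, version)
        for name, version in sorted(parse_pinned(requirements).items())
        if version in ADVISORIES.get(name, set())
    ]


for finding in scan("examplelib==1.0.1\notherpkg==2.4.0  # patched\n"):
    print(f"vulnerable: {finding.package}=={finding.version}")
```

In a CI job, a non-empty result would typically fail the build before the artifact moves on.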

Continuous Delivery (CD)

Building upon CI, Continuous Delivery automates the release process so that every change that passes the pipeline can be deployed to staging automatically and to production on demand. Automating CI/CD across the development, testing, production, and monitoring phases helps teams ship higher-quality code faster and more securely.
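
A minimal sketch of that promotion logic, assuming two environments where staging is deployed automatically and production waits for an explicit approval (the continuous delivery model). The `deploy` function and environment names are hypothetical stand-ins for whatever your CD platform actually invokes.

```python
from enum import Enum


class Env(Enum):
    STAGING = "staging"
    PRODUCTION = "production"


# Environments that need a human sign-off. Under continuous *deployment*
# this set would simply be empty.
GATED = {Env.PRODUCTION}


def deploy(artifact: str, env: Env) -> str:
    """Hypothetical deploy step; a real pipeline would invoke its CD tool here."""
    return f"{artifact} -> {env.value}"


def promote(artifact: str, tests_passed: bool, approved: bool) -> list[str]:
    """Promote an artifact through the environments in order."""
    log: list[str] = []
    if not tests_passed:
        return log  # nothing is promoted when automated checks fail
    for env in Env:  # Enum iteration follows definition order
        if env in GATED and not approved:
            log.append(f"waiting for approval: {env.value}")
            break
        log.append(deploy(artifact, env))
    return log


print(promote("app-1.4.2", tests_passed=True, approved=False))
# ['app-1.4.2 -> staging', 'waiting for approval: production']
```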

Infrastructure as Code (IaC)

Modern delivery pipelines leverage Infrastructure as Code to ensure consistent environments across the entire deployment lifecycle. This approach treats infrastructure configuration as version-controlled code, enabling reproducible deployments and reducing environment-related issues.
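
IaC tools such as Terraform compare the version-controlled definition with the real environment and compute a plan before changing anything. The sketch below shows only that diffing idea, with resources modelled as plain dictionaries; the resource names and attributes are hypothetical, and no real provider API is called.

```python
from typing import Any

Resources = dict[str, dict[str, Any]]


def plan(desired: Resources, actual: Resources) -> list[tuple[str, str]]:
    """Return the (action, resource) pairs needed to make `actual` match `desired`."""
    actions: list[tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


# Desired state, as it would live in version control (hypothetical resources).
desired = {
    "web-vm": {"size": "medium", "image": "ubuntu-22.04"},
    "db": {"engine": "postgres", "version": "15"},
}
# State reported by the running environment.
actual = {
    "web-vm": {"size": "small", "image": "ubuntu-22.04"},
    "old-cache": {"engine": "redis"},
}

for action, name in plan(desired, actual):
    print(action, name)
```

Because the plan is derived purely from the two states, re-running it after a successful apply yields an empty list, which is what makes such deployments reproducible.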

Building Your First Delivery Pipeline: A Step-by-Step Approach

Step 1: Planning and Design

Before diving into implementation, map out your current development workflow and identify bottlenecks. Consider these essential questions:

What testing strategies will ensure quality?

Which environments need automated deployment?

How will you handle rollbacks and failures?

What security measures must be integrated?

Step 2: Tool Selection and Setup

Choose tools that align with your technology stack and organizational needs. Popular options include:

CI/CD Platforms:

Jenkins (open-source, highly customizable)

GitLab CI/CD (integrated with version control)

Azure DevOps (Microsoft ecosystem integration)

GitHub Actions (seamless GitHub integration)

CircleCI (cloud-native solution)

Container Orchestration:

Docker for containerization

Kubernetes for orchestration

Helm for package management

Step 3: Implementation Phases

Start with a simple pipeline and gradually add complexity:

Phase 1: Basic CI with automated builds and unit tests

Phase 2: Add integration testing and code quality gates

Phase 3: Implement automated deployment to staging

Phase 4: Add production deployment with approval workflows

Phase 5: Integrate monitoring and feedback mechanisms

Essential Best Practices for Delivery Pipeline Success

Fail Fast Philosophy

An effective DevOps test strategy lets tests "fail fast": the quickest, most targeted checks run first, and the pipeline stops at the first failure. This approach identifies defects early in the pipeline, preventing issues from propagating to later stages.
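
One way to apply this is to order checks from cheapest to most expensive and stop at the first failure, so a broken unit test is reported in seconds instead of after a long end-to-end run. In this sketch the check names, duration estimates, and check functions are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Check:
    name: str
    est_seconds: float        # rough cost estimate, e.g. taken from past runs
    run: Callable[[], bool]   # returns True when the check passes


def fail_fast(checks: list[Check]) -> tuple[list[str], Optional[str]]:
    """Run checks cheapest-first and stop at the first failure.

    Returns the names of the checks that passed and the name of the
    failing check (or None if everything passed)."""
    passed: list[str] = []
    for check in sorted(checks, key=lambda c: c.est_seconds):
        if not check.run():
            return passed, check.name  # slower checks after this are skipped
        passed.append(check.name)
    return passed, None


checks = [
    Check("e2e", 900, lambda: True),
    Check("unit", 30, lambda: True),
    Check("lint", 5, lambda: True),
    Check("integration", 240, lambda: False),  # simulated failure
]
print(fail_fast(checks))  # (['lint', 'unit'], 'integration')
```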

Version Everything

Maintain version control for:

Application code

Configuration files

Infrastructure definitions

Database schemas

Documentation

Implement Comprehensive Testing

Structure your testing strategy in layers:

Unit tests for individual components

Integration tests for component interactions

End-to-end tests for complete user workflows

Performance tests for scalability validation

Security tests for vulnerability assessment

Monitor and Measure

Track key metrics to continuously improve your pipeline:

Build success rates

Deployment frequency

Lead time for changes

Mean time to recovery (MTTR)

Change failure rate
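
The last four metrics in this list can be computed from a simple log of deployments. The `Deployment` record format below is a hypothetical one chosen for illustration; in practice the data would come from your CI/CD platform and incident tracker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def _avg_hours(deltas: list[timedelta]) -> float:
    """Average of a list of durations, in hours (0.0 for an empty list)."""
    if not deltas:
        return 0.0
    return sum(deltas, timedelta()).total_seconds() / 3600 / len(deltas)


def delivery_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Deployment frequency, lead time, change failure rate and MTTR for one period."""
    failures = [d for d in deploys if d.failed]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "avg_lead_time_hours": _avg_hours([d.deployed_at - d.committed_at for d in deploys]),
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        # Simplification: recovery time is measured from the failing deployment.
        "mttr_hours": _avg_hours(
            [d.restored_at - d.deployed_at for d in failures if d.restored_at is not None]
        ),
    }


t0 = datetime(2025, 6, 2, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=2),
               failed=True, restored_at=t0 + timedelta(days=1, hours=3)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=4)),
]
print(delivery_metrics(deploys, period_days=4))
```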

Common Challenges and Solutions

Challenge 1: Complex Legacy Systems

Many organizations struggle with integrating legacy applications into modern delivery pipelines. Address this by:

Creating adapter layers for legacy systems

Implementing strangler fig patterns for gradual modernization

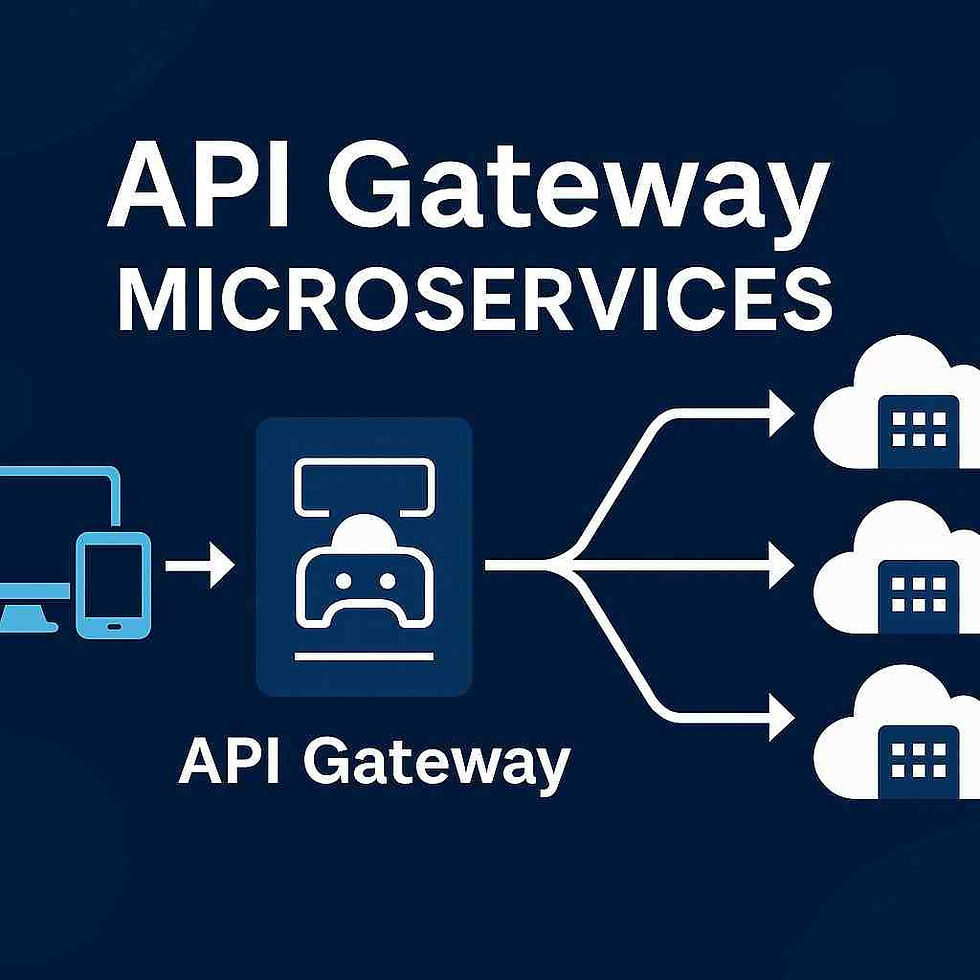

Using API gateways for seamless integration
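
The strangler fig pattern is often implemented as a routing rule in front of the legacy system: requests for functionality that has already been rewritten go to the new service, and everything else still reaches the legacy application. A minimal sketch of that routing decision, with hypothetical path prefixes and backend URLs:

```python
# Hypothetical backends; in practice this rule lives in an API gateway or proxy.
LEGACY = "http://legacy.internal"
MODERN = "http://orders-service.internal"

# Path prefixes already migrated to the new service. The tuple grows as
# modernization proceeds, until the legacy backend receives no traffic.
MIGRATED_PREFIXES = ("/api/orders", "/api/invoices")


def route(path: str) -> str:
    """Pick the backend for a request path (whole path segments only)."""
    if any(path == p or path.startswith(p + "/") for p in MIGRATED_PREFIXES):
        return MODERN
    return LEGACY


for path in ("/api/orders/42", "/api/ordersx", "/api/customers/7"):
    print(path, "->", route(path))
```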

Challenge 2: Security and Compliance

Balancing speed with security requires:

Implementing security scanning at every stage

Using policy-as-code for compliance automation

Creating secure secret management practices

Regular security audits and penetration testing
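
Policy-as-code means compliance rules are written as code, versioned, and evaluated automatically in the pipeline; dedicated tools such as Open Policy Agent exist for this. The sketch below imitates the idea in plain Python. The manifest fields, the `vault:` secret-reference convention, and the three rules are hypothetical examples, not a real policy language.

```python
from typing import Any, Callable, Optional

Manifest = dict[str, Any]
Policy = Callable[[Manifest], Optional[str]]  # returns a violation message or None


def pinned_image(m: Manifest) -> Optional[str]:
    image = m.get("image", "")
    last = image.rsplit("/", 1)[-1]  # ignore a registry host such as "reg:5000/"
    if ":" not in last or last.endswith(":latest"):
        return "image must be pinned to an explicit version tag"
    return None


def non_root(m: Manifest) -> Optional[str]:
    if m.get("run_as_root", False):
        return "containers must not run as root"
    return None


def secrets_from_store(m: Manifest) -> Optional[str]:
    # Hypothetical convention: secret values must be references like "vault:path".
    for key, value in m.get("env", {}).items():
        if "PASSWORD" in key.upper() and not str(value).startswith("vault:"):
            return f"env var {key} must reference the secret store"
    return None


POLICIES: list[Policy] = [pinned_image, non_root, secrets_from_store]


def evaluate(manifest: Manifest) -> list[str]:
    """Return every policy violation; an empty list means the deploy may proceed."""
    return [msg for policy in POLICIES if (msg := policy(manifest)) is not None]


manifest = {"image": "shop/web:latest", "env": {"DB_PASSWORD": "hunter2"}}
for violation in evaluate(manifest):
    print("policy violation:", violation)
```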

Challenge 3: Cultural Resistance

Overcoming organizational resistance involves:

Providing comprehensive training programs

Demonstrating quick wins and tangible benefits

Creating cross-functional collaboration opportunities

Establishing clear communication channels

Advanced Optimization Techniques

Pipeline Parallelization

Optimize build times by running independent stages in parallel. Breaking a monolithic application into separately buildable services (often managed with a service mesh once they run as microservices) lets each service move through its own pipeline, so work that does not depend on other work can run concurrently.
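
Parallel execution starts from knowing which stages depend on which. Python's standard `graphlib.TopologicalSorter` (Python 3.9+) can group a stage graph into waves whose members do not depend on each other and could therefore run concurrently. The stage names and dependencies below are hypothetical; a real pipeline would hand each wave to its CI platform's parallel jobs.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each stage maps to the stages it depends on.
STAGES = {
    "build": set(),
    "lint": set(),
    "unit-tests": {"build"},
    "security-scan": {"build"},
    "integration-tests": {"unit-tests"},
    "deploy-staging": {"integration-tests", "security-scan", "lint"},
}


def parallel_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group stages into waves; stages within one wave are independent."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every stage whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # treat the whole wave as finished
    return waves


for i, wave in enumerate(parallel_waves(STAGES), start=1):
    print(f"wave {i}: {', '.join(wave)}")
```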

Artifact Management

Implement robust artifact management strategies:

Use artifact repositories like Nexus or Artifactory

Implement retention policies for storage optimization

Create immutable deployment artifacts

Maintain artifact traceability throughout the pipeline
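
Immutability and traceability can both be anchored on a content digest: the artifact is stored under its SHA-256 hash, so it cannot be silently replaced, and a small metadata record links it back to the commit and pipeline run that produced it. The in-memory `ArtifactStore` below is a hypothetical stand-in for a repository such as Nexus or Artifactory; it does not model their APIs.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ArtifactRecord:
    digest: str        # "sha256:<hex>": the artifact's immutable identity
    name: str
    commit: str        # source commit that produced the artifact
    pipeline_run: str  # CI run identifier, for traceability


class ArtifactStore:
    """Hypothetical in-memory, write-once artifact store."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}
        self._records: dict[str, ArtifactRecord] = {}

    @staticmethod
    def _digest(data: bytes) -> str:
        return "sha256:" + hashlib.sha256(data).hexdigest()

    def publish(self, name: str, data: bytes, commit: str, run: str) -> ArtifactRecord:
        digest = self._digest(data)
        if digest in self._records:
            return self._records[digest]  # same bytes: reuse, never overwrite
        self._blobs[digest] = data
        self._records[digest] = ArtifactRecord(digest, name, commit, run)
        return self._records[digest]

    def fetch(self, digest: str) -> bytes:
        data = self._blobs[digest]
        if self._digest(data) != digest:  # integrity check on the way out
            raise ValueError(f"artifact {digest} is corrupted")
        return data

    def trace(self, digest: str) -> ArtifactRecord:
        """Which commit and pipeline run produced this artifact?"""
        return self._records[digest]


store = ArtifactStore()
record = store.publish("app.tar.gz", b"build output", commit="a1b2c3d", run="run-17")
print(record.digest, store.trace(record.digest).commit)
```

Deploying by digest rather than by a mutable name such as `latest` guarantees that staging and production run byte-identical artifacts.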

Environment Management

Standardize environment provisioning using:

Container-based environments for consistency

Environment-specific configuration management

Automated environment cleanup and provisioning

Blue-green deployment strategies for zero-downtime releases
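
Blue-green deployment keeps two identical environments and points traffic at one of them. A release goes to the idle environment and is health-checked there; only then does the router switch over, and the previous environment stays intact as an instant rollback target. The `Router` class and the `health_check` callable below are hypothetical simplifications of what a load balancer or service mesh would do.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Router:
    live: str = "blue"  # color currently receiving traffic
    versions: dict[str, str] = field(default_factory=lambda: {"blue": "v1", "green": "v1"})

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def release(self, version: str, health_check: Callable[[str, str], bool]) -> bool:
        """Deploy to the idle color and switch traffic only if it is healthy."""
        target = self.idle
        self.versions[target] = version
        if not health_check(target, version):
            return False  # live environment untouched; users never saw the bad build
        self.live = target  # the cut-over is a single pointer change
        return True

    def rollback(self) -> None:
        """Send traffic back to the other color, which still runs the old version."""
        self.live = self.idle


router = Router()
router.release("v2", lambda color, version: True)
print(router.live, router.versions[router.live])  # green v2
router.rollback()
print(router.live, router.versions[router.live])  # blue v1
```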

Measuring Success: Key Performance Indicators

Track these essential metrics to evaluate your delivery pipeline effectiveness:

| Metric | Target Range | Impact |
|---|---|---|
| Deployment Frequency | Daily to multiple times per day | Higher frequency indicates a mature pipeline |
| Lead Time | Hours to days | Faster delivery of value to customers |
| Change Failure Rate | 0-15% | Lower rates indicate better quality processes |
| Mean Time to Recovery | Minutes to hours | Faster recovery improves reliability |

Frequently Asked Questions

What is the difference between continuous delivery and continuous deployment?

Continuous delivery automates the entire release process up to production deployment, but requires manual approval for production releases. Continuous deployment takes this further by automatically deploying to production once all automated tests pass.

How long does it take to implement a delivery pipeline?

Implementation timelines vary significantly based on complexity, team size, and existing infrastructure. Simple pipelines can be set up in days, while enterprise-wide implementations may take several months to complete.

What skills are needed to manage delivery pipelines?

Essential skills include an understanding of version control systems, automation tools, cloud platforms, containerization, scripting languages, and security best practices. Team collaboration and problem-solving abilities are equally important.

How do delivery pipelines improve software quality?

Delivery pipelines improve quality through automated testing at multiple stages, consistent deployment processes, early detection of issues, and rapid feedback loops that enable quick fixes.

Can delivery pipelines work with legacy applications?

Yes, delivery pipelines can be adapted for legacy applications through incremental modernization approaches, API integration layers, and gradual migration strategies.

What are the most common pipeline failures?

Common failures include test failures, environment configuration issues, dependency conflicts, network connectivity problems, and insufficient resource allocation.

How do you secure delivery pipelines?

Secure pipelines through encrypted communications, secure credential management, regular security scanning, access controls, audit logging, and compliance automation.

What tools are essential for delivery pipelines?

Essential tools include version control systems (Git), CI/CD platforms (Jenkins, GitLab), containerization (Docker), orchestration (Kubernetes), monitoring tools, and security scanners.

Conclusion

Implementing an effective delivery pipeline in DevOps transforms how organizations develop and deploy software. By automating repetitive tasks, ensuring consistent quality checks, and enabling rapid feedback loops, delivery pipelines become the backbone of successful DevOps initiatives.

The journey to pipeline mastery requires careful planning, gradual implementation, and continuous improvement. Start small, focus on automation, and gradually expand your pipeline capabilities as your team gains confidence and expertise.

Remember that the most successful delivery pipelines are those that evolve with your organization's needs while maintaining focus on reliability, security, and speed. Invest time in proper setup, monitoring, and optimization to realize the full potential of your DevOps delivery pipeline.

Key Takeaways

• Start Simple: Begin with basic CI/CD and gradually add complexity as your team matures

• Automate Everything: Reduce manual intervention through comprehensive automation strategies

• Implement Fail-Fast Testing: Detect and fix issues early in the development cycle

• Monitor Continuously: Track key metrics to identify improvement opportunities

• Security First: Integrate security scanning and compliance checks throughout the pipeline

• Version Control All Assets: Maintain versioning for code, configurations, and infrastructure

• Foster Collaboration: Break down silos between development and operations teams

• Measure Success: Use data-driven approaches to optimize pipeline performance

• Plan for Scalability: Design pipelines that can grow with your organization's needs

• Invest in Training: Ensure team members have the necessary skills for pipeline management
